LoTIS: Learning to Localize Reference Trajectories in Image-Space for Visual Navigation

Abstract

We present LoTIS, a model for visual navigation that provides robot-agnostic image-space guidance by localizing a reference RGB trajectory in the robot's current view, without requiring camera calibration, poses, or robot-specific training. Instead of predicting actions tied to specific robots, we predict the image-space coordinates of the reference trajectory as they would appear in the robot's current view. This creates robot-agnostic visual guidance that easily integrates with local planning. Consequently, our model's predictions provide guidance zero-shot across diverse embodiments. By decoupling perception from action and learning to localize trajectory points rather than imitate behavioral priors, we enable a cross-trajectory training strategy that learns robust invariance to viewpoint and camera changes. We outperform state-of-the-art methods by 20-50 percentage points in success rate on forward navigation, and paired with a local planner we achieve 94-98% success rate across diverse sim and real environments. Furthermore, we achieve over 5x improvements on challenging tasks where baselines fail, such as backward traversal. The system is straightforward to use: we show how even a video from a handheld phone camera directly enables different robots to navigate to any point on the trajectory.

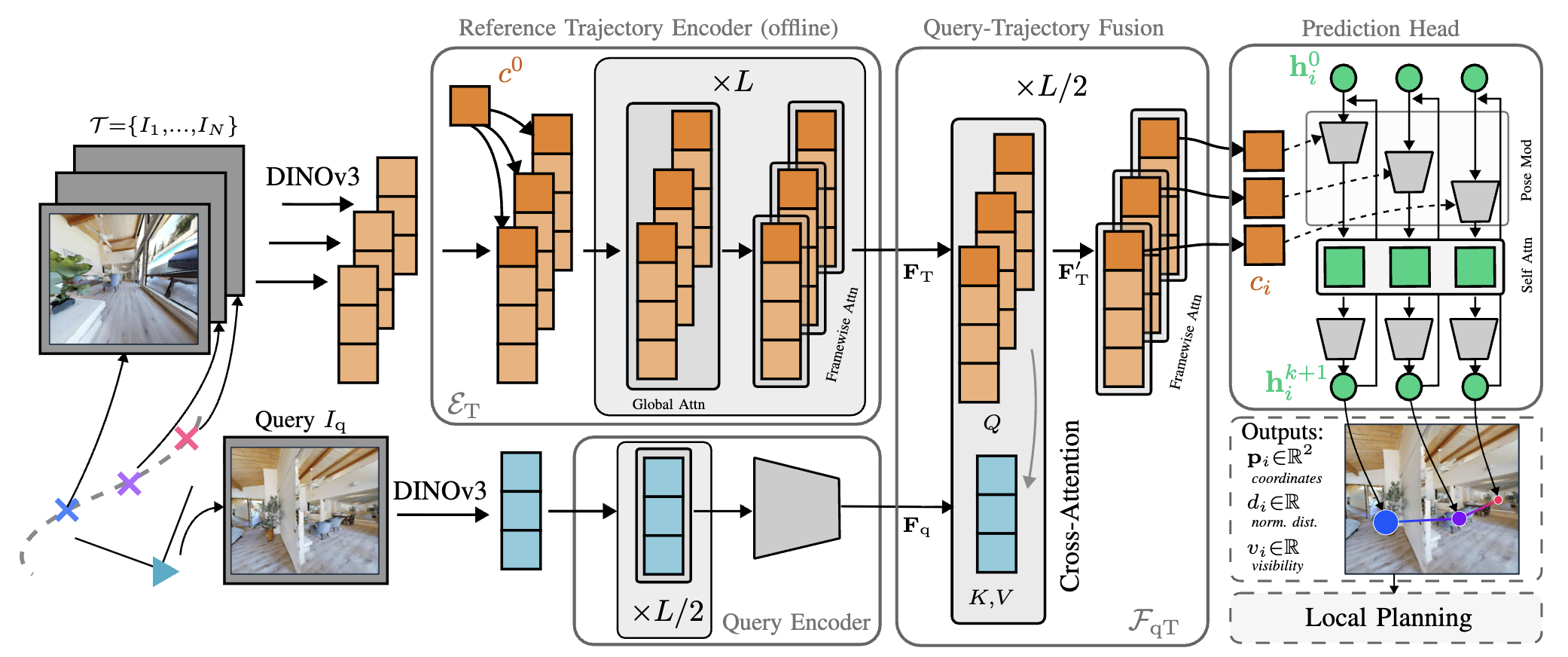

Method

LoTIS decouples perception from action by predicting where a reference trajectory appears in the robot's current view, rather than predicting robot-specific actions. For each frame in the reference trajectory, our model outputs: (1) the 2D image coordinates where that pose would appear, (2) whether it's visible, and (3) its normalized distance. This robot-agnostic representation interfaces directly with any local planner, enabling zero-shot transfer across embodiments, from drones to quadrupeds, using the same phone-recorded trajectory. A cross-trajectory training strategy, where reference and query images come from different trajectories, teaches robustness to camera mismatch and enables backward traversal where prior methods fail.
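To illustrate how the three per-frame outputs might feed a local planner, here is a minimal sketch. It is not the authors' implementation. The `PointPrediction` container, the `select_target` rule, the `max_distance` threshold, the assumption that normalized distance lies in [0, 1], and the proportional steering law are all hypothetical choices made for this example.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class PointPrediction:
    """Hypothetical container for the prediction of one reference frame."""
    u: float         # horizontal image coordinate, in pixels
    v: float         # vertical image coordinate, in pixels
    visible: bool    # whether the reference pose is visible in the current view
    distance: float  # normalized distance (assumed here to lie in [0, 1])


def select_target(preds: List[PointPrediction], forward: bool = True,
                  max_distance: float = 0.5) -> Optional[int]:
    """Pick the visible, nearby point closest to the goal end of the trajectory.

    Forward traversal scans from the last reference frame toward the first;
    backward traversal scans from the first toward the last. Returns None
    when no point is both visible and within max_distance.
    """
    order = range(len(preds) - 1, -1, -1) if forward else range(len(preds))
    for i in order:
        p = preds[i]
        if p.visible and p.distance <= max_distance:
            return i
    return None


def steering_from_pixel(u: float, image_width: int, gain: float = 1.0) -> float:
    """Map a horizontal pixel position to a yaw command in [-gain, gain].

    Assumed sign convention: positive yaw turns left, so a target to the
    right of the image center yields a negative command.
    """
    half = image_width / 2.0
    offset = max(-1.0, min(1.0, (u - half) / half))
    return -gain * offset


preds = [
    PointPrediction(100.0, 200.0, True, 0.1),
    PointPrediction(400.0, 180.0, True, 0.3),
    PointPrediction(600.0, 150.0, False, 0.6),
    PointPrediction(620.0, 140.0, True, 0.9),
]
idx = select_target(preds, forward=True)
yaw = steering_from_pixel(preds[idx].u, image_width=640)
```

Because the target is expressed in pixels rather than robot actions, only `steering_from_pixel` would need to change per embodiment in this sketch; a real local planner would typically also handle obstacle avoidance and speed control.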

Experiment Gallery

Reference trajectories are recorded with a handheld phone. The goal is to reach the end of the trajectory (forward) or its start (backward) from arbitrary starting positions that have visual overlap with the reference.

Reference Trajectory

Navigation Runs: Starts 0-5, each shown forward and backward.

Robustness Experiments

LoTIS maintains strong performance under challenging real-world conditions.

People Occlusions (Drone 🚁)

Uses the same Indoors 2 reference trajectory, but with people walking through the scene and occluding the view.

Navigation Runs: Starts 0-5, each shown forward and backward.

Nighttime (Quadruped 🐕)

Uses the same Outdoors 2 reference trajectory (recorded during daytime), but evaluated at night with scene changes.

Navigation Runs: Starts 1-3, each shown forward and backward.

Kilometer-Scale Navigation

LoTIS scales to long-range outdoor trajectories.

BibTeX

@article{lotis2025,
  title={LoTIS: Learning to Localize Reference Trajectories in Image-Space},
  author={Anonymous},
  journal={Under Review},
  year={2025}
}